AWS: Using the AWS CLI to search within files in an S3 Bucket - Part 1 - CloudFormation

When setting up infrastructure is more straightforward than the 'simple' task you need to perform

Recently, as part of my job, I needed to help colleagues find some very specific files within an S3 bucket. This post covers setting up some basic infrastructure that mimics how the original data was generated, followed by a script to generate test data. In the next post I’ll go through how the search script works.

Repository

All of the code and the scripts are available on my GitHub.

Starting with the project itself, I’ve used a devcontainer based on the TypeScript-Node devcontainer.

The devcontainer.json has been updated (lines 10-13) to pull in the AWS CLI feature and the community-contributed AWS CDK feature:

```json
"features": {
  "ghcr.io/devcontainers/features/aws-cli:1": {},
  "ghcr.io/devcontainers-contrib/features/aws-cdk:2": {}
},
```

This means the AWS CLI and CDK will be available in the container. To avoid having to set up the CLI each time the container is built, I’ve mapped my local .aws folder into the devcontainer (lines 17-19):

```json
"mounts": [
  "source=${localEnv:HOME}/.aws,target=/home/node/.aws,type=bind,consistency=cached"
],
```

The above configuration was put together based on Rehan Haider’s blog post.

Infrastructure

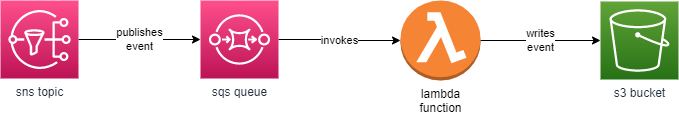

The aim of the stack is to setup infrastructure like the following:

The Lambda function is written in JavaScript and writes incoming events directly to the S3 bucket. It is defined in the stack as follows:

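```typescript
// Imports at the top of the stack file (AWS CDK v2)
import * as path from 'path';
import { Code, Function, Runtime } from 'aws-cdk-lib/aws-lambda';

// Inside the stack constructor; `bucket` is the stack's S3 bucket construct
const lambda = new Function(this, 'findansqsevent-lambda', {
  runtime: Runtime.NODEJS_18_X,
  // Handler source lives in js-function/findansqsevent
  code: Code.fromAsset(path.join(__dirname, '/../js-function/findansqsevent')),
  handler: "index.handler",
  // Read by the function to decide where objects are written
  environment: {
    bucket: bucket.bucketName,
    prefix: "outputFolder/"
  }
});
```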

When the Lambda is first initialised, the bucket and prefix environment variables are read once at module level and then reused by every invocation:

```javascript
const { bucket, prefix } = process.env;
```
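Because `process.env` values are typed as `string | undefined`, a missing variable only surfaces later as a runtime error (for example, `prefix.endsWith` throwing on `undefined`). A minimal TypeScript sketch of validating the configuration once at start-up; `readLambdaConfig` is a hypothetical helper, not part of the repository:

```typescript
// Hypothetical helper, not from the repository: checks the environment
// variables set by the CDK stack before any message is handled.
interface LambdaConfig {
  bucket: string;
  prefix: string;
}

function readLambdaConfig(env: Record<string, string | undefined>): LambdaConfig {
  const { bucket, prefix } = env;
  // Fail fast at initialisation rather than on the first PutObject call
  if (!bucket) {
    throw new Error("Missing required environment variable: bucket");
  }
  // An empty prefix is allowed (write to the bucket root); a missing one is not
  if (prefix === undefined) {
    throw new Error("Missing required environment variable: prefix");
  }
  return { bucket, prefix };
}

// In the Lambda module this would run once, outside the handler:
// const { bucket, prefix } = readLambdaConfig(process.env);
```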

The function is set up to handle batches of incoming messages, looping through every record and PUTting each one into the S3 bucket in turn:

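```javascript
// Assumes an ES module (index.mjs) on Node.js 18, where AWS SDK v3 is bundled
import { S3Client, PutObjectCommand } from '@aws-sdk/client-s3';

const client = new S3Client({});
const { bucket, prefix } = process.env;

export const handler = async (event) => {
  // An SQS trigger can deliver several records in one invocation
  for (const { messageId, body } of event.Records) {
    console.log('SQS message %s: %j', messageId, body);

    const command = new PutObjectCommand({
      Body: body,
      Bucket: bucket,
      // Name the object after the SQS message ID, avoiding a double slash
      Key: prefix.endsWith('/') ?
        `${prefix}${messageId}.json` :
        `${prefix}/${messageId}.json`
    });

    // Awaited inside the loop, so records are written one at a time
    const response = await client.send(command);
    console.log('S3 response: ' + JSON.stringify(response));
  }
};
```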
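The key-building ternary is easy to pull out and unit test on its own. The TypeScript sketch below is a hypothetical refactor, not the code in the repository: `buildObjectKey` mirrors the ternary, and `writeRecords` takes the PUT operation as a parameter (standing in for `client.send(new PutObjectCommand(...))`) so the loop can be exercised without AWS. It also assumes an empty prefix should mean "write to the bucket root", whereas the original ternary would produce a leading `/`.

```typescript
// Hypothetical refactor for illustration; names are not from the repository.
interface SqsRecord {
  messageId: string;
  body: string;
}

// Join prefix and message ID with exactly one "/".
// An empty prefix writes to the bucket root instead of producing "/<id>.json".
function buildObjectKey(prefix: string, messageId: string): string {
  if (prefix === "" || prefix.endsWith("/")) {
    return `${prefix}${messageId}.json`;
  }
  return `${prefix}/${messageId}.json`;
}

// Stand-in for the S3 PutObject call, injected so the loop can be tested offline.
type PutFn = (bucket: string, key: string, body: string) => Promise<void>;

// Writes records sequentially, as the original handler does, and returns the keys used.
async function writeRecords(
  records: SqsRecord[],
  bucket: string,
  prefix: string,
  put: PutFn,
): Promise<string[]> {
  const keys: string[] = [];
  for (const { messageId, body } of records) {
    const key = buildObjectKey(prefix, messageId);
    await put(bucket, key, body);
    keys.push(key);
  }
  return keys;
}
```

Keeping the writes sequential matches the original handler; wrapping the PUTs in `Promise.all` would parallelise them, at the cost of a less predictable log order.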

The stack should first be built with `npm run build`. Once that has completed, it can be deployed with `cdk deploy`. This assumes you have the AWS CLI and CDK installed and configured, and that the target account and region have been bootstrapped with `cdk bootstrap`.

Data generation script

The repository includes the `generate-messages.sh` shell script, which publishes events.

The script takes two parameters:

- `-t|--topic <value>`: the ARN of the deployed topic
- `-i|--iterations <value>`: the number of events to generate
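For comparison, the same flag handling can be sketched in TypeScript. This is a hypothetical port, not the shell script in the repository; `parseGeneratorArgs` is an illustrative name, and rejecting a non-positive iteration count is an assumption about sensible behaviour rather than something the original script is known to do.

```typescript
// Hypothetical TypeScript port of the script's flag handling, for illustration only.
interface GeneratorOptions {
  topic: string;
  iterations: number;
}

function parseGeneratorArgs(argv: string[]): GeneratorOptions {
  let topic: string | undefined;
  let iterations: number | undefined;

  for (let i = 0; i < argv.length; i++) {
    const flag = argv[i];
    const value = argv[i + 1];
    switch (flag) {
      case "-t":
      case "--topic":
        topic = value;
        i++; // skip the value just consumed
        break;
      case "-i":
      case "--iterations":
        iterations = Number(value);
        i++;
        break;
      default:
        throw new Error(`Unknown option: ${flag}`);
    }
  }

  if (!topic) {
    throw new Error("A topic ARN is required (-t|--topic)");
  }
  if (iterations === undefined || !Number.isInteger(iterations) || iterations < 1) {
    throw new Error("Iterations must be a positive integer (-i|--iterations)");
  }
  return { topic, iterations };
}
```

In Node.js this would be called with `process.argv.slice(2)`, which drops the runtime and script paths.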

The script uses the `aws sns publish` command to publish events to the SNS topic created by the stack above:

```shell
aws sns publish --topic-arn "$topic" --message "$value" > /dev/null
```
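If you port the publishing loop out of shell, passing the arguments as an array (for example to Node's `child_process.execFileSync("aws", args)`) avoids shell quoting of the message body entirely. A minimal sketch, where `buildPublishArgs` and `buildPublishBatch` are hypothetical helpers and only the `--topic-arn` and `--message` options from the command above are used:

```typescript
// Hypothetical helper: builds the argument list for one `aws sns publish` call.
// Each element reaches the CLI unchanged, so JSON in the message needs no escaping.
function buildPublishArgs(topicArn: string, message: string): string[] {
  return ["sns", "publish", "--topic-arn", topicArn, "--message", message];
}

// One argument list per iteration, mirroring the script's loop.
function buildPublishBatch(topicArn: string, messages: string[]): string[][] {
  return messages.map((message) => buildPublishArgs(topicArn, message));
}
```

Passing `stdio: ["ignore", "ignore", "inherit"]` to `execFileSync` would mirror `> /dev/null`: standard output is discarded while errors still reach the terminal.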

The output of `aws sns publish` is redirected to /dev/null to keep the script's output clean. Without the redirect, each call prints something similar to the following:

```json
{
    "MessageId": "123a45b6-7890-12c3-45d6-333322221111"
}
```

For this use case I’m not concerned with the message ID; however, it may be important to you when publishing messages.
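If you do want to record the IDs, the JSON printed by the CLI is simple to parse. A TypeScript sketch, assuming the output shape shown above; `extractMessageId` is a hypothetical helper:

```typescript
// Hypothetical helper: pulls MessageId out of `aws sns publish` JSON output.
function extractMessageId(cliOutput: string): string {
  const parsed: unknown = JSON.parse(cliOutput);
  // Guard against null, arrays of the wrong shape, or a missing/non-string field
  if (typeof parsed === "object" && parsed !== null && "MessageId" in parsed) {
    const id = (parsed as { MessageId: unknown }).MessageId;
    if (typeof id === "string") {
      return id;
    }
  }
  throw new Error("No MessageId found in publish output");
}
```

Alternatively, the CLI can extract the field itself: adding `--query MessageId --output text` to `aws sns publish` prints just the ID.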

Before running the script you need to set the execute permission. The following command does this for the current user only: `chmod u+x generate-messages.sh`

To run the script you will need the AWS CLI installed and configured.